AI Gesture Control: What Applications Would You Choose to Control with the Force?

Smart homes and the Internet of Things (IoT) have been big buzzwords recently, and rightfully so. The rise of Artificial Intelligence (AI) has opened up many possibilities in this industry, but it has also raised ever-growing privacy concerns.

Camera-based sensors perform well for many applications, including gesture recognition. However, they are not infallible: poor lighting and background clutter can degrade their performance. A bigger problem is that they are not entirely suitable for the home environment, since the prospect of being watched constantly does not appeal to many people. I, for one, draw the line at camera-based smart toilets!

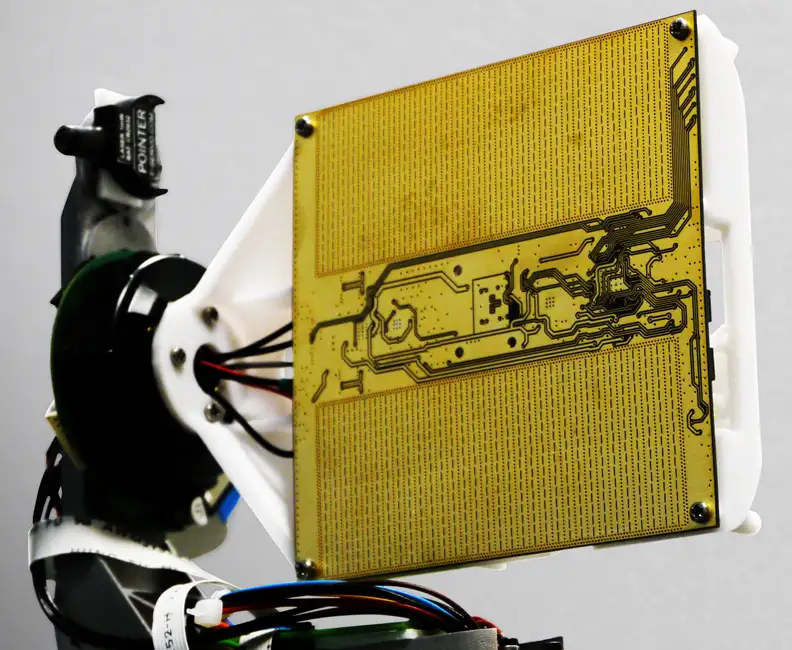

As an alternative, we have developed a privacy-preserving gesture recognition system that uses a Texas Instruments mmWave radar instead. This work was first presented at IEEE WCCI 2020.

Unlike camera-based sensors, where an individual can be seen in each frame, a radar sensor “sees” the world as time-varying radar reflections, which do not form an image and cannot be used to distinguish individuals. Since the sensor is unaffected by lighting conditions, it is a prime candidate for applications such as fall detection in care homes. Another use case is gesture control for household appliances. Imagine using a camera-based gesture control system to turn the lights on, only to find that it doesn’t work in low light!

For data collection, a TI IWR6843ISK-ODS radar sensor was used, which operates between 60 and 64 GHz (mmWave). It has 3 transmit and 4 receive antennas, forming a total of 12 virtual antennas, and provides a 120-degree field of view in both azimuth and elevation.
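To put those numbers in context, here is a small sketch of the arithmetic behind them. The virtual antenna count is simply transmit times receive antennas. The wavelength and range resolution use the standard relations λ = c / f and ΔR = c / (2B); the 4 GHz bandwidth is an assumption that the full 60 to 64 GHz band is swept, which a real chirp configuration may not do. The function names are illustrative only.

```python
C = 299_792_458.0  # speed of light in m/s


def virtual_antennas(n_tx: int, n_rx: int) -> int:
    """Each transmit/receive pair acts as one virtual antenna."""
    return n_tx * n_rx


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength for a given carrier frequency."""
    return C / freq_hz


def range_resolution_m(bandwidth_hz: float) -> float:
    """Best-case range resolution for a given swept bandwidth."""
    return C / (2.0 * bandwidth_hz)


n_virtual = virtual_antennas(3, 4)          # 3 TX x 4 RX
lam_mm = wavelength_m(60e9) * 1e3           # wavelength at 60 GHz, in mm
res_cm = range_resolution_m(4e9) * 1e2      # assumes the full 4 GHz is swept

print(n_virtual, round(lam_mm, 2), round(res_cm, 2))
```

A wavelength of roughly 5 mm is where the "millimetre wave" name comes from, and a resolution of a few centimetres is what makes it plausible to separate a moving hand from the body behind it.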

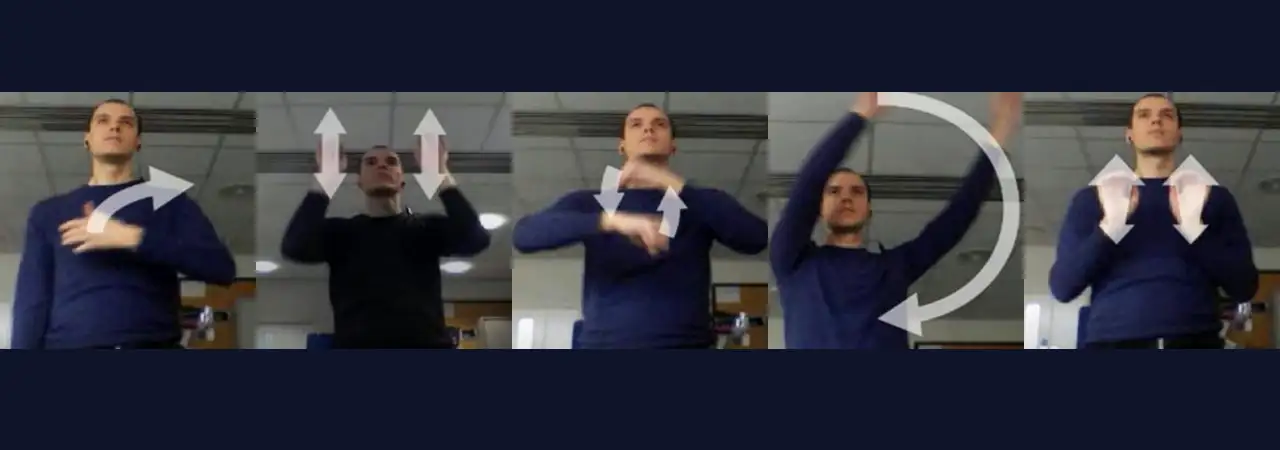

Using a series of hand gesture data points, I wanted to see whether machine learning could map each gesture to a different control signal. A total of 5 candidate gestures were examined; they are shown in the image below.

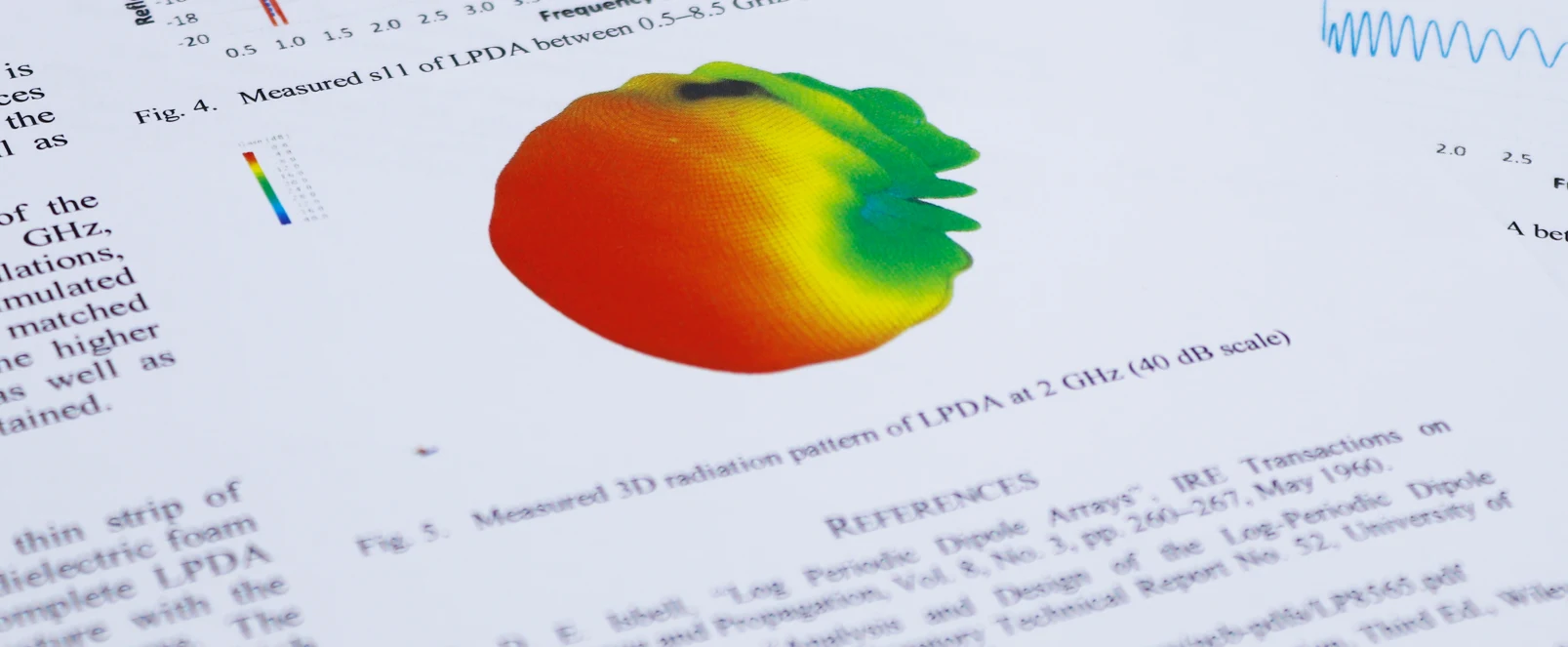

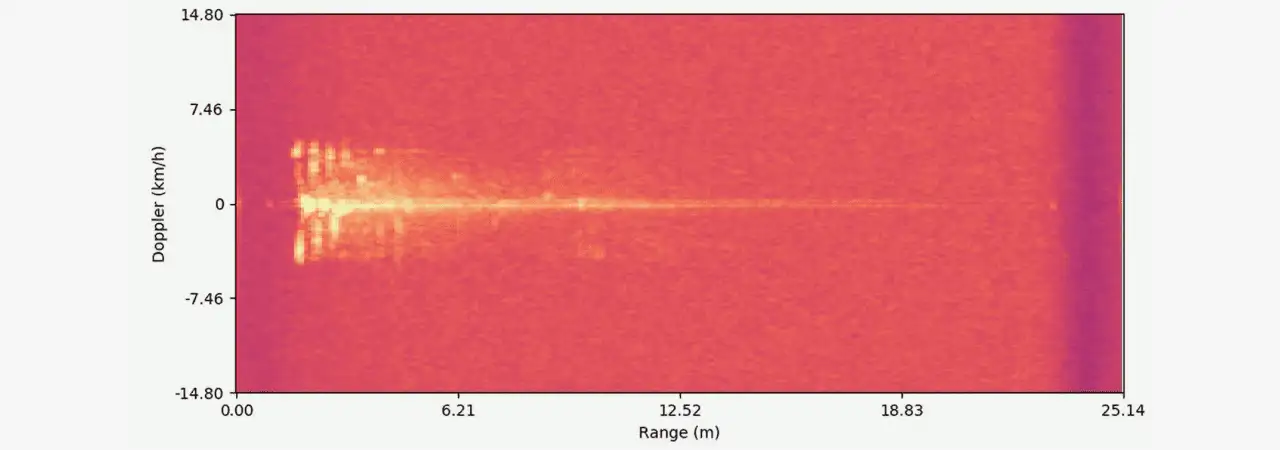

The image above shows a range-Doppler heatmap, averaged over all the virtual antennas, representing how the radar “sees” an individual. In it, a single individual performs a bicycle-like pedalling motion with their hands in front of the radar. The x-axis represents the range of an object from the radar, while the y-axis represents the radial velocity of the object towards (-) or away from (+) the radar.

The bright point at zero Doppler is the static body, while the points slightly closer to the radar, moving towards or away from it, are the arms. All the points observed at ranges behind the body are due to reflections from different surfaces (e.g. floor, ceiling, walls, table), which are common indoors.
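For readers curious how such a heatmap is produced, the following is a minimal sketch using NumPy, not the processing chain used in this work. It assumes the raw data is a complex cube of shape (virtual antennas, chirps, samples per chirp), applies one FFT along the samples for range and one along the chirps for Doppler, and averages the magnitudes over the antennas. The synthetic "body" and "hand" targets, the array sizes, and all names are made up for illustration; which Doppler sign means "towards" depends on the radar configuration and is not modelled here.

```python
import numpy as np


def range_doppler_map(cube: np.ndarray) -> np.ndarray:
    """cube: complex samples, shape (n_antennas, n_chirps, n_samples).

    Returns a real map of shape (n_chirps, n_samples): rows are Doppler
    bins (zero Doppler in the middle), columns are range bins.
    """
    range_fft = np.fft.fft(cube, axis=2)             # samples -> range bins
    doppler_fft = np.fft.fft(range_fft, axis=1)      # chirps  -> Doppler bins
    doppler_fft = np.fft.fftshift(doppler_fft, axes=1)
    return np.abs(doppler_fft).mean(axis=0)          # average over antennas


# Hypothetical sizes: 12 virtual antennas, 32 chirps, 64 samples per chirp.
N_ANT, N_CHIRPS, N_SAMPLES = 12, 32, 64
chirp = np.arange(N_CHIRPS)[:, None]
sample = np.arange(N_SAMPLES)[None, :]


def tone(range_bin: int, doppler_bin: int, amplitude: float) -> np.ndarray:
    """Ideal point target that lands exactly on one range/Doppler bin."""
    phase = 2j * np.pi * (range_bin * sample / N_SAMPLES
                          + doppler_bin * chirp / N_CHIRPS)
    return amplitude * np.exp(phase)


# A strong static "body" and a weaker, slightly closer, moving "hand".
frame = tone(12, 0, 1.0) + tone(10, 3, 0.5)
# Each antenna sees the same scene with a different phase offset.
antenna_phase = np.exp(1j * np.linspace(0.0, np.pi, N_ANT))[:, None, None]
cube = antenna_phase * frame[None, :, :]

rd_map = range_doppler_map(cube)
doppler_idx, range_idx = np.unravel_index(np.argmax(rd_map), rd_map.shape)
print(rd_map.shape, doppler_idx - N_CHIRPS // 2, range_idx)
```

Because the magnitudes are taken before averaging, the per-antenna phase offsets do not cancel the targets out, which is the point of combining the antennas incoherently.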

An incoherent sum across all virtual antennas is performed and all non-zero points are extracted. The signal magnitude, range, velocity, azimuth angle, and x, y, and z coordinates are calculated for each detected point and used to train a machine learning model. A Convolutional Neural Network (CNN) and a Type-2 Fuzzy Logic based classifier were trained and used to classify the five gestures.
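As a rough illustration of the per-point features listed above, the sketch below turns one detection into a seven-value feature vector. It is not the code used in this work. The axis convention (y pointing straight out of the sensor, x to the side, z up) and the use of an elevation angle to obtain z are assumptions, and the `Detection` class and `to_features` function are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    magnitude: float      # signal magnitude of the detected point
    range_m: float        # distance from the radar in metres
    velocity_mps: float   # radial velocity in metres per second
    azimuth_rad: float    # horizontal angle from boresight
    elevation_rad: float  # vertical angle from boresight (assumed available)


def to_features(d: Detection) -> List[float]:
    """Return [magnitude, range, velocity, azimuth, x, y, z] for one point."""
    horizontal = d.range_m * math.cos(d.elevation_rad)
    x = horizontal * math.sin(d.azimuth_rad)   # sideways offset
    y = horizontal * math.cos(d.azimuth_rad)   # distance along boresight
    z = d.range_m * math.sin(d.elevation_rad)  # height offset
    return [d.magnitude, d.range_m, d.velocity_mps, d.azimuth_rad, x, y, z]


# A point 1 m away, 30 degrees to the side, level with the sensor.
features = to_features(Detection(0.7, 1.0, -0.4, math.radians(30.0), 0.0))
print([round(v, 3) for v in features])
```

A frame of such vectors, one per detected point, is the kind of input a classifier could then be trained on.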

This gesture classification was then transformed into a control signal that could drive any household appliance. I decided to try it out in real time by controlling a PowerPoint presentation, as shown in the image below. The bicycle hand motion and the forward motion were mapped to the next and previous slide respectively, while a circular motion was used to exit the presentation.
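The mapping from a classified gesture to a command can be sketched as a simple lookup. This is an illustrative outline rather than the code behind the demo: the label strings, the command names, and the confidence threshold are all assumptions, and actually sending a key press to PowerPoint is left out.

```python
from typing import Optional

# Hypothetical labels and command names for the three mapped gestures.
GESTURE_TO_COMMAND = {
    "bicycle": "next_slide",
    "forward": "previous_slide",
    "circle": "exit_presentation",
}


def gesture_to_command(label: str, confidence: float,
                       threshold: float = 0.8) -> Optional[str]:
    """Return a command for a classified gesture, or None to do nothing.

    Low-confidence predictions and gestures without a mapping are ignored
    so that stray movements do not change the slide.
    """
    if confidence < threshold:
        return None
    return GESTURE_TO_COMMAND.get(label)


print(gesture_to_command("bicycle", 0.95))  # confident, mapped gesture
print(gesture_to_command("circle", 0.40))   # below the threshold
```

Keeping the mapping in a plain dictionary means the same classifier output could be pointed at a different appliance by swapping the table, without retraining anything.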

The work is still a few steps away from “using the force”, but we will get there.

What applications would you like to control with The Force?

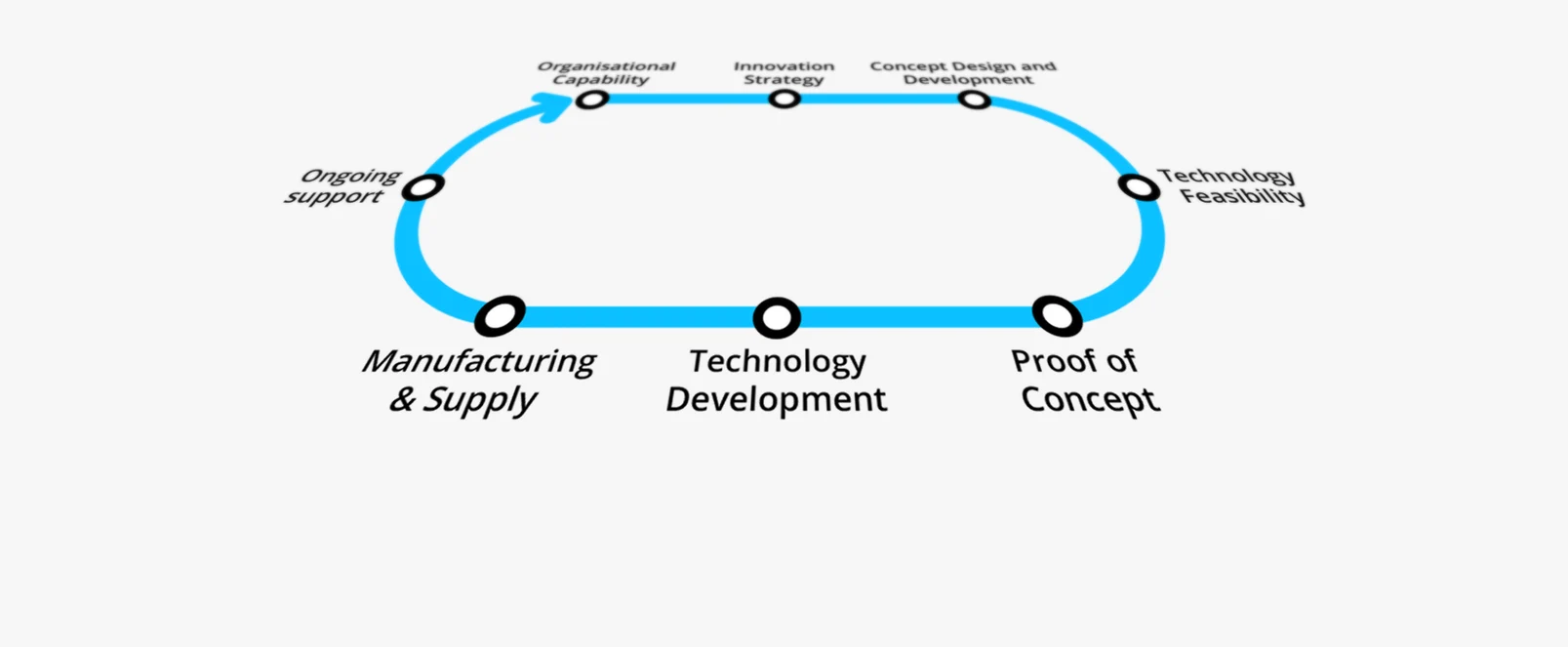

Technology Platforms

Plextek's 'white-label' technology platforms allow you to accelerate product development, streamline efficiencies, and access our extensive R&D expertise to suit your project needs.

-

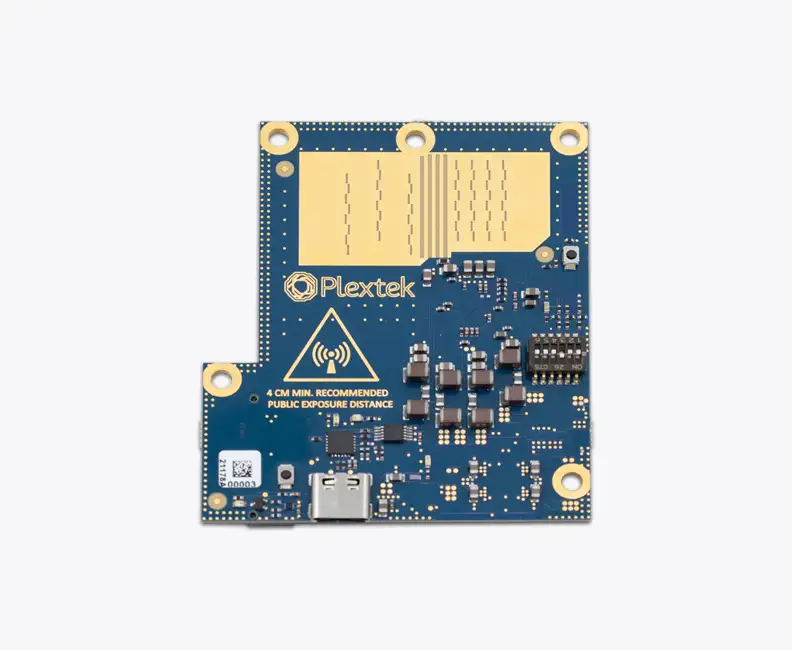

01 Configurable mmWave Radar ModuleConfigurable mmWave Radar Module

Plextek’s PLX-T60 platform enables rapid development and deployment of custom mmWave radar solutions at scale and pace

-

02 Configurable IoT FrameworkConfigurable IoT Framework

Plextek’s IoT framework enables rapid development and deployment of custom IoT solutions, particularly those requiring extended operation on battery power

-

03 Ubiquitous RadarUbiquitous Radar

Plextek's Ubiquitous Radar will detect returns from many directions simultaneously and accurately, differentiating between drones and birds, and even determining the size and type of drone

Downloads

View All Downloads- PLX-T60 Configurable mmWave Radar Module

- PLX-U16 Ubiquitous Radar

- Configurable IOT Framework

- MISPEC

- Cost Effective mmWave Radar Devices

- Connected Autonomous Mobility

- Antenna Design Services

- Plextek Drone Sensor Solutions Persistent Situational Awareness for UAV & Counter UAV

- mmWave Sense & Avoid Radar for UAVs

- Exceptional technology to positive impact your marine operations

- Infrastructure Monitoring